Problem definition

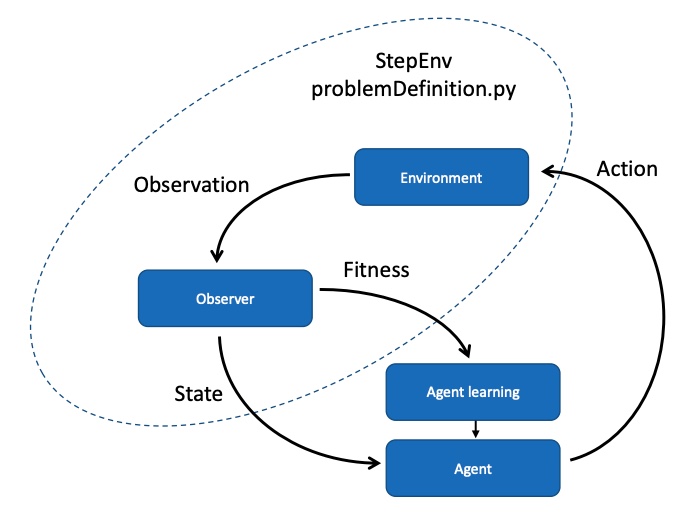

The eCode software searches for a program that optimises a fitness function. The fitness function is calculated in a Python file called problemDefinition.py; by editing this file, the search can be configured to solve a wide range of problems. The problem definition file has the following responsibilities:

· Manage an environment for each individual

· Feed program inputs to evolve

· Receive program outputs from evolve

· Calculate the change in the fitness function

Using the problem definition file, eCode can be set up to behave in a manner similar to reinforcement learning or to supervised learning. The diagram shows a reinforcement learning case.

The StepEnv Python function in problemDefinition.py is responsible for simulating the environment, observing the environment and calculating a fitness value. The eCode software accumulates the fitness values and manages the learning of the agent. In our case the agent is a virtual machine running linear software that has been learned. The learning process will either maximise or minimise the accumulated fitness, depending on the configuration settings in config.txt.

Note that the process in Figure 1 is performed in parallel for all individuals in the population being evolved. The functions in problemDefinition.py use a Python object, pEnv, to hold the state of the environment for each individual. This pEnv state is created by the function InitialiseEnv and passed back to eCode. Every call to StepEnv then receives the pEnv state that is unique to the individual being stepped.

The eCode software evolution process is illustrated in the diagram. The right-hand side of this diagram shows how the python functions in the file problemDefinition.py are called.

The learning process accumulates the fitness function over multiple trials (NUM_TRIALS_PER_GENERATION). Each trial consists of a number of steps (NUM_STEPS_PER_TRIAL). These values are configurable in config.txt.
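The relationship between trials, steps and the problem definition functions can be sketched as follows. This is a minimal illustration, not eCode's actual implementation: the loop structure, the name POPULATION_SIZE, the stand-in CounterEnv class and the placeholder action are all assumptions, and the sketch runs sequentially whereas eCode processes individuals in parallel.

```python
# Hypothetical sketch of how eCode might drive problemDefinition.py.
# The two NUM_* names come from config.txt; their values here are made up.
NUM_TRIALS_PER_GENERATION = 2
NUM_STEPS_PER_TRIAL = 3
POPULATION_SIZE = 4  # hypothetical name, for illustration only


class CounterEnv:
    """Stand-in environment whose only state is a step counter."""

    def __init__(self):
        self.t = 0


def InitialiseEnv():
    return CounterEnv()


def ResetEnv(env):
    env.t = 0


def StepEnv(env, action, cycles, individualIndex):
    env.t += 1
    state = [float(env.t)]
    fitness = 1.0  # constant fitness per step, for illustration only
    return state, fitness


def CloseEnv(env):
    pass


def evaluate_generation():
    # One environment per individual, created once.
    envs = [InitialiseEnv() for _ in range(POPULATION_SIZE)]
    accumulated = [0.0] * POPULATION_SIZE
    for trial in range(NUM_TRIALS_PER_GENERATION):
        for i, env in enumerate(envs):
            if trial > 0:
                ResetEnv(env)  # reset between trials
            for step in range(NUM_STEPS_PER_TRIAL):
                action = [0.0]  # placeholder for the evolved program's output
                state, fitness = StepEnv(env, action, 0, i)
                accumulated[i] += fitness
    for env in envs:
        CloseEnv(env)
    return accumulated
```

In this sketch each individual accumulates fitness over NUM_TRIALS_PER_GENERATION × NUM_STEPS_PER_TRIAL calls to StepEnv.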

Problem definition file functions

GetActionSize

This function is called once per run of evolve. It is used to inform evolve of the number of values expected in the action array. This is the number of output variables from the program that is being evolved. The OpenAI gymnasium cheetah example version of GetActionSize is:

    ACTION_SIZE = 6

    def GetActionSize():
        return ACTION_SIZE

GetStateSize

This function is called once per run of evolve. It is used to inform evolve of the number of values expected in the state array. This is the number of input variables into the program that is being evolved. The OpenAI gymnasium cheetah example version of GetStateSize is:

    STATE_SIZE = 18

    def GetStateSize():
        return STATE_SIZE

Note that we have 18 observations from the cheetah MuJoCo model because we create the environment with the optional cheetah position included as the first observation. We pass the entire observation through to evolve as the state.

InitialiseEnv

This function is called once per individual within the population during the initialisation phase of evolve. It has no arguments. It returns a Python object that references the new environment created for this individual. The OpenAI gymnasium cheetah example version of InitialiseEnv is:

    # Gymnasium is assumed to be imported as gym, matching the gym.make call below
    import gymnasium as gym

    def InitialiseEnv():
        env = gym.make("HalfCheetah-v4", exclude_current_positions_from_observation=False)
        observation, info = env.reset()
        return env

In this case, we include the position of the cheetah in the observation so that we can use it later when calculating fitness.

ResetEnv

This function is called once per individual within the population to reset the environment between trials. It has one argument which is the individual’s environment to be reset. The OpenAI gymnasium cheetah example version of ResetEnv is:

    def ResetEnv(env):
        observation, info = env.reset()
        return

StepEnv

This function is called for every individual within the population at every step of the environment. A step could be a training data example, or a step could be a simulated step within an environment. It has four arguments. The first is the individual’s environment to be stepped. The second is the action array that contains the set of outputs from the learned program to be applied to the environment. The third is the number of cycles of the virtual machine required to produce this action. The fourth is the index of the individual for which the step is being called. The OpenAI gymnasium cheetah example version of StepEnv is:

    def StepEnv(env, action, cycles, individualIndex):
        observation, reward, terminated, truncated, info = env.step(action)
        state = observation
        fitness = observation[0]
        return state, fitness

This steps the environment using the provided action. It also calculates a fitness value (in this case observation[0], which is the position of the cheetah). Finally, we pass the new state and the fitness back to evolve. Note that in this example we simply pass the observation through as the state.

More complex fitness functions and states are possible.
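As an illustration of a step that is a training data example rather than a simulation step, a supervised-style problem definition might look like the sketch below. Everything in it is an assumption made for illustration: the toy dataset (learning y = 2x), the dictionary used as the environment, and the squared-error fitness, which would need the minimise option in config.txt. It also assumes the program has already seen the input of the current example before StepEnv is called; how eCode supplies the very first state is not covered here, so the fitness of the first step may need special handling.

```python
# Hypothetical supervised-learning problem definition: learn y = 2x.
DATASET = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0)]  # (input, target) pairs
STATE_SIZE = 1
ACTION_SIZE = 1


def GetStateSize():
    return STATE_SIZE


def GetActionSize():
    return ACTION_SIZE


def InitialiseEnv():
    # The per-individual environment only tracks which example is current.
    return {"index": 0}


def ResetEnv(env):
    env["index"] = 0


def StepEnv(env, action, cycles, individualIndex):
    # Score the action against the target of the current example.
    _, target = DATASET[env["index"]]
    fitness = (action[0] - target) ** 2  # squared error, to be minimised
    # Advance to the next example and present its input as the new state.
    env["index"] = (env["index"] + 1) % len(DATASET)
    next_input, _ = DATASET[env["index"]]
    return [next_input], fitness


def CloseEnv(env):
    return
```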

The learning algorithm will attempt to maximise the fitness function, which trains the learned program to move the cheetah as far to the right as possible within the simulated time. It is also possible to minimise the fitness function via a configuration option in config.txt.

The number of cycles taken by the virtual machine is passed so that it can be built into the fitness function if necessary. This would enable the learning algorithm to find the minimum size program that achieves the desired goal. However, this feature should be used carefully, as minimising the program size too early in the training process can reduce the diversity of behaviours in the population and slow, or even halt, convergence.
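One way the cycle count could enter the fitness is as a small penalty subtracted from the distance travelled. The sketch below is only an illustration: CYCLE_PENALTY and fitness_with_cycle_penalty are hypothetical names, not eCode settings, and the weight would need tuning so that it does not dominate the position term.

```python
CYCLE_PENALTY = 0.001  # hypothetical weight; not an eCode configuration setting


def fitness_with_cycle_penalty(position, cycles, penalty=CYCLE_PENALTY):
    """Reward distance travelled, minus a small cost per virtual machine cycle."""
    return position - penalty * cycles


def StepEnv(env, action, cycles, individualIndex):
    observation, reward, terminated, truncated, info = env.step(action)
    # observation[0] is the cheetah position, as in the example above.
    fitness = fitness_with_cycle_penalty(observation[0], cycles)
    return observation, fitness
```

Starting with a penalty of zero and raising it only once the population already achieves the goal is one way to respect the caution about early minimisation.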

The individual’s index is passed so that persistent data in the problem definition file can be stored on a per-individual basis and used in calculating fitness or state values. For example, in the case of the cheetah, a previous x position could be stored for each individual to enable the calculation of velocity for use in the fitness function or the state.
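The previous-position idea could be sketched as follows. The module-level dictionary previous_x is a hypothetical name, and the fitness here is the change in x per step, which is proportional to velocity; dividing by the simulation time step would give the velocity itself.

```python
# Hypothetical module-level store: individual index -> previous x position.
previous_x = {}


def StepEnv(env, action, cycles, individualIndex):
    observation, reward, terminated, truncated, info = env.step(action)
    x = observation[0]
    # On an individual's first step there is no previous position,
    # so the change in position is taken to be zero.
    fitness = x - previous_x.get(individualIndex, x)
    previous_x[individualIndex] = x
    return observation, fitness
```

Because ResetEnv receives only the environment and not the index, entries left over from a previous trial would need to be handled separately; how best to do that depends on the order in which eCode calls the functions.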

CloseEnv

This function is called once per individual within the population to close the environment. It has one argument which is the individual’s environment to be closed. The OpenAI gymnasium cheetah example version of CloseEnv is:

    def CloseEnv(env):
        env.close()
        return

Note that if evolve is killed during training, as described in the suggested usage section above, this function will never be reached. Instead, the environments will be closed when the operating system kills and cleans up the evolve process.